The PC; the personal computer; the IBM-compatible. Whatever you want to call it, somehow it has maintained a dominant presence for nearly four decades.

Try to launch almost any program written from the ’80s onwards and there’s a good chance it will run: your PC has backward compatibility going right back to the ’70s, enabling you to run pieces of history as though they were from yesterday.

In fact, your computer is brimming with heritage, from the way your motherboard is laid out to the size of your drive bays to the layout of your keyboard.

Flip through any PC magazine and you’ll see everything from bulky desktop computers to sleek business laptops; from expensive file servers to single-board devices only a few inches big.

About this article

This article by John Knight was originally published as a two-part series in Linux Format issues 268 and 269.

Somehow, all these machines are part of the same PC family, and somehow they can all talk to each other.

But where did all of this start? That’s what we’ll be examining: from the development of the PC to its launch in the early ’80s, as it fought off giants such as Apple, as it was cloned by countless manufacturers, and as it eventually went 32-bit.

We’ll look at the ’90s and the start of the multimedia age, the war between the chip makers, and the establishment of Windows as the world’s leading but not best operating system.

But before we go anywhere, to understand the revolutionary nature of the PC you first need to grasp what IBM was at the time, and the culture that surrounded it.

Emergence of IBM

IBM was formed in the early 20th century by people who invented the kind of punch-card machines and tabulators that revolutionised the previous century. IBM introduced Big Data to the US government, with its equipment keeping track of millions of employment records in the 1930s.

It gave us magnetic swipe cards, the hard disk, the floppy disk and more. It would develop the first demonstration of AI, and be integral to NASA’s space programmes.

IBM has employed five Nobel Prize winners, six Turing Award recipients, and is one of the world’s largest employers.

IBM’s mainframe computers dominated the ’60s and ’70s, and that grip on the industry gave IBM an almost instant association with computers in the minds of American consumers.

But trouble was on the horizon. The late ’70s were saturated by ‘microcomputers’ from the likes of Apple, Commodore, Atari and Tandy. IBM was losing customers as giant mainframes made way for microcomputers.

IBM took years to develop anything, with endless layers of bureaucracy, testing every detail before releasing anything to market.

It was a long way from offering simple and (relatively) affordable desktop computers, and didn’t even have experience with retail stores.

Meanwhile, microcomputer manufacturers were developing new models in months, and there was no way IBM could keep up with traditional methods.

Assembling a crew

In August 1979, the heads of IBM met to discuss the growing threat of microcomputers, and its need to develop a personal computer in retaliation. They created a series of Independent Business Units, which were given a level of autonomy.

One of these would soon be led by executive Bill Lowe, who would become the father of the PC. In 1980, Lowe promised he could turn out a model within a year if he wasn’t constrained by IBM’s methods.

Lowe’s initial research led him to Atari, which was keen to work for IBM as an OEM builder, proposing a machine based on the Atari 800 line.

Lowe suggested IBM should acquire Atari, but management rejected the idea in favour of developing a new model instead.

This model was to be developed within the year, with Lowe given an independent team.

This new squad, the Dirty Dozen – a group of IBM misfits – was allowed to do things however they saw fit to get the job done. The task was code-named Project Chess, with Lowe promising a working prototype in 30 days.

Lowe went for an open architecture. While dealers were very interested in an IBM machine, it just wouldn’t work if they had to operate within IBM’s proprietary methods.

If dealers were going to repair these machines, they needed to be made from standard off-the-shelf parts.

By August 1980, Lowe had a very basic prototype and a business plan that broke away from established IBM practice.

Based on this new open architecture, the PC would use standard components and software, instead of IBM parts, and be sold via normal retail channels.

Over the coming months, the Dirty Dozen grew rapidly in number and toiled away to transform the prototype into a world-class machine.

They focused on giving the PC an excellent keyboard, which they delivered with the IBM Model F. It needed to be durable and reliable, so each key was rated to 100 million keystrokes.

IBM was renowned for quality keyboards, and would try to replicate the feel of older beamspring terminal keyboards with a new Buckling Spring technology. This gave the keyboards the famous clacky sound and weighted feel that was popular with typists, with tactile feedback unrivalled at the time.

The PC’s keyboard alone would become the main selling point for a lot of customers, and IBM keyboards would be the best in the business for the next two decades. Next, the crew turned to the CPU.

IBM’s own 801 RISC processor was considered (which would have been significantly more powerful), but for convenience and compatibility’s sake, the team chose the Intel 8088.

Because the team chose the 8088 over the superior 8086, the original IBM PC is technically only partly 16-bit. Both chips are 16-bit internally, but the 8088 has a cost-saving 8-bit external data bus.

The simplified 8088 cost less, could be produced in higher quantities and reduced motherboard complexity. A lot of the hardware likely to be used in the PC also had an 8-bit bus, so an 8088 would be better for compatibility.
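To put a rough number on that trade-off, the sketch below counts how many bus cycles each chip needs to move a 16-bit word and estimates peak bus throughput at the PC's 4.77MHz clock. It is illustrative arithmetic only: the function names are ours, and the figure of four clocks per bus cycle (with no wait states) is an assumption about the 8086 family that does not come from this article.

```python
import math

CLOCK_HZ = 4_770_000       # the PC's 4.77MHz clock, as quoted in the article
CLOCKS_PER_BUS_CYCLE = 4   # assumption: minimum bus cycle length, no wait states


def bus_cycles(num_bytes: int, bus_width_bits: int) -> int:
    """Bus cycles needed to move num_bytes, assuming aligned accesses."""
    bytes_per_cycle = bus_width_bits // 8
    return math.ceil(num_bytes / bytes_per_cycle)


def peak_bytes_per_second(bus_width_bits: int) -> float:
    """Theoretical peak transfer rate for a data bus of the given width."""
    return CLOCK_HZ / CLOCKS_PER_BUS_CYCLE * (bus_width_bits // 8)


for name, width in (("8088", 8), ("8086", 16)):
    print(
        f"{name}: {bus_cycles(2, width)} bus cycle(s) per 16-bit word, "
        f"about {peak_bytes_per_second(width) / 1e6:.1f} MB/s peak"
    )
```

Under these assumptions the 8088 needs two trips to memory for every 16-bit word where the 8086 needs one, which is the price IBM paid for cheaper, simpler supporting hardware.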

As for the motherboard, RAM would be expandable up to 256KB, an optional 8087 maths co-processor would be available, and there would be five ISA expansion slots.

Putting the machine together, launch models would have a choice of 16 or 64KB of RAM, space for two 5.25-inch floppy disk drives and a cassette jack for tape storage.

Buyers had a choice of monochrome or CGA graphics, and the Intel 8088 powering the system would be running at 4.77MHz.

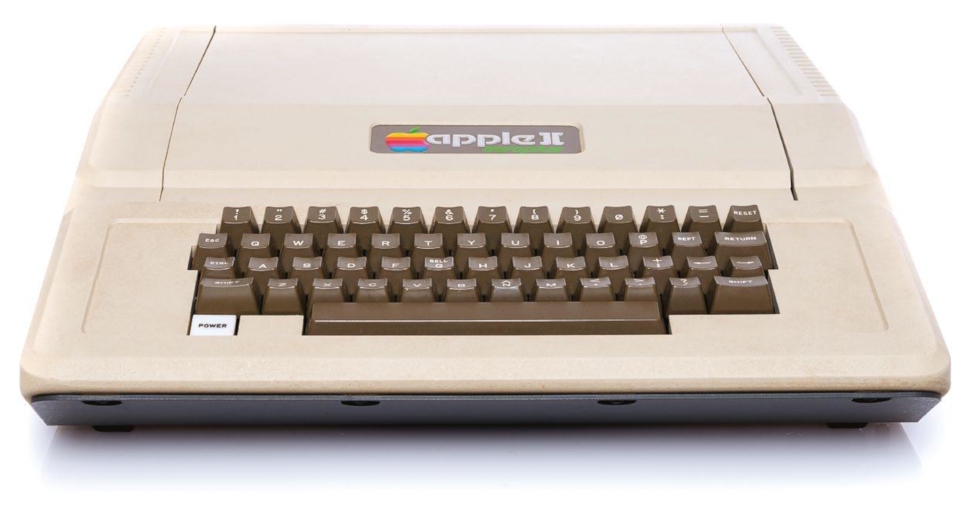

Apple II: The Great Rival

Without the Apple II, it’s possible the PC would never have existed. Although a subject of debate, it’s generally agreed that the Apple II was a primary influence on the PC. Many IBM engineers owned one – as did many customers, who found that they could easily do jobs such as working on spreadsheets that were nearly impossible on a giant mainframe.

Sporting an 8-bit MOS 6502 processor, with between 4KB and 64KB of RAM, it debuted in June 1977 for a base price of $1,298. It had an incredible production run, selling from 1977 to 1993.

Developed by Steve Wozniak, the Apple II stands in stark contrast to products developed by Apple’s lesser Steve (Mr Jobs).

Chiefly, the Apple II is designed around an open architecture, with a removable lid allowing easy access to the motherboard and expansion slots. Much like the PC, the Apple II would be the subject of numerous clones over the years.

Even though the PC was newer, the Apple II retained advantages over the PC, such as having eight expansion slots over the PC’s five, and much more convenient gaming, with bundled joysticks and games that loaded in seconds.

On to the software

With the hardware sorted, the burden of developing the operating system was largely outsourced to Microsoft, with IBM offering consumers the joint-venture PC DOS.

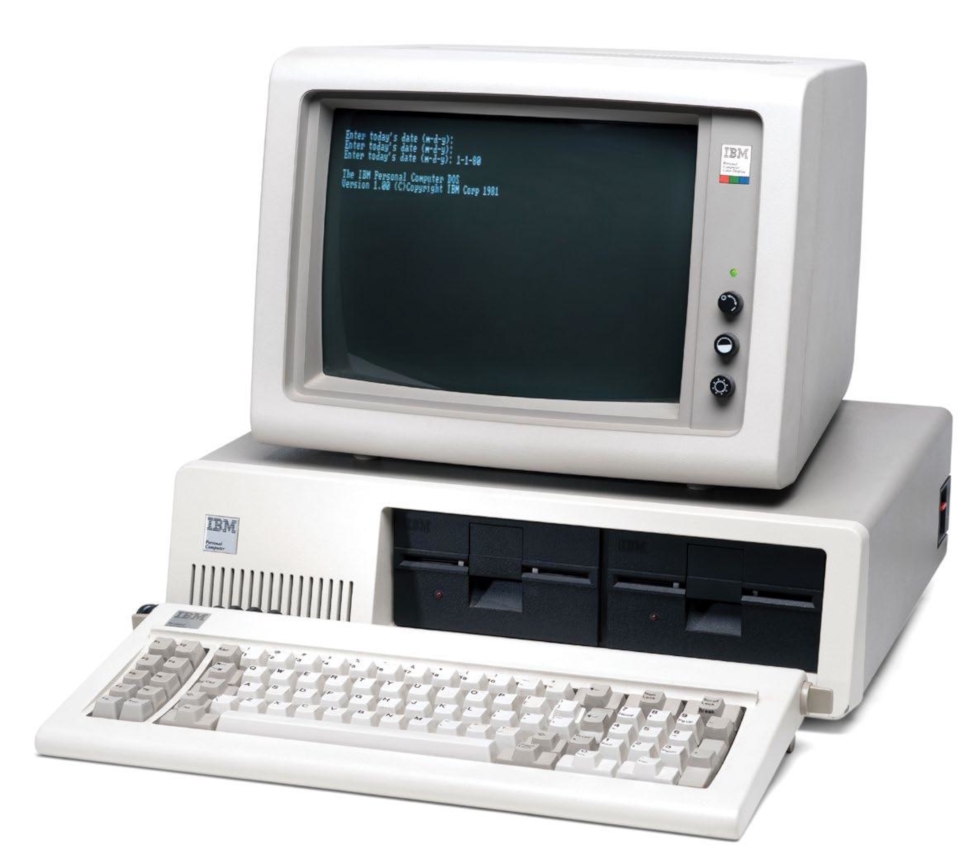

The final machine was dubbed the IBM Model Number 5150. This moniker would be immediately forgotten, for in the minds of the press it was really IBM’s Personal Computer that was about to launch.

After a 12-month development, IBM announced its new Personal Computer on 12 August 1981. The $1,565 base model included 16KB of RAM, CGA graphics and an input jack, relying on the user to provide a cassette deck – disk drives were optional and far more expensive than tape.

Rivals such as RadioShack and Apple were unconcerned. Steve Jobs bought one to dissect and was unimpressed by some of its old-fashioned tech.

In its hubris, Apple took out a full-page ad proclaiming “Welcome, IBM. Seriously.” But it failed to recognise the weight a company like IBM carried with businesses.

Even though IBM’s product was inferior in many ways to its cheaper competitors, businesses saw IBM as a safe bet, with excellent customer support.

Within a year, the PC overtook the Apple II as the best-selling desktop computer. In 1983, two thirds of corporate customers standardised on the PC as their computer of choice, with only nine percent choosing Apple – and by 1984, the PC’s annual revenue had doubled Apple’s.

IBM surprised the industry by breaking its own traditions. Not only did it allow service training for non-IBM personnel, but it published the PC’s tech specs and schematics to encourage third-party peripherals and software.

Within a couple of years, the PC was the new standard for desktop computers, spawning a massive sub-industry of peripherals and expansions.

In 1983, the PC was updated to IBM’s XT (eXtended Technology) standard, removing the cassette jack and adding a 10MB hard disk. It was the first PC with a hard disk as standard.

August 1984 brought IBM’s next major release, the PC/AT (Advanced Technology). Sporting a 6MHz Intel 80286 (aka 286 – no one used the ‘80’ prefix any more), it came with 256KB of RAM, expandable up to 16MB.

Initial models were limited to CGA and monochrome, but IBM’s new EGA standard was soon introduced, allowing for 16 colours at 640x350. This was another step toward the PC we recognise now, with things like standardised drive bays, motherboard mounting points and the basic keyboard layout we now take for granted.

Although a hit with businesses, the first PC was too expensive for home users. The base model’s price wasn’t too outlandish, but it didn’t include a monitor or floppy drive; a decent 64KB model with a floppy drive and monitor was more than $3,000 (over $8,000 in today’s money).

Rivals smelled opportunity, and with an open architecture, it wouldn’t be long before IBM clones would arrive.

The PCjr

The PCjr looked promising: an Intel 8088 CPU, CGA Plus graphics and the kind of sound chips used by Sega consoles. IBM promised a home machine with PC compatibility, improved graphics and sound and a lower price of $1,269. Consumers adored the wireless keyboard, and it was IBM, the king of computing. Pundits thought the PCjr would destroy the competition, but on release it was universally panned.

A Commodore 64 was a third of the price, faster, with better graphics, and a huge software library. The PCjr’s strange hardware and optimisations also meant it was only partially PC-compatible, failing gamers and business users alike. What really riled consumers was the appalling rubber chiclet keyboard: a relatively expensive computer – from a company known for quality keyboards – was lumbered with something found on $99 budget micros.

Initial sales were a disaster, but a campaign of discounts, ads and upgrades (particularly to the keyboard) turned things around, making the PCjr a mild success. Nevertheless, its reputation was damaged, and it was cancelled in 1985.

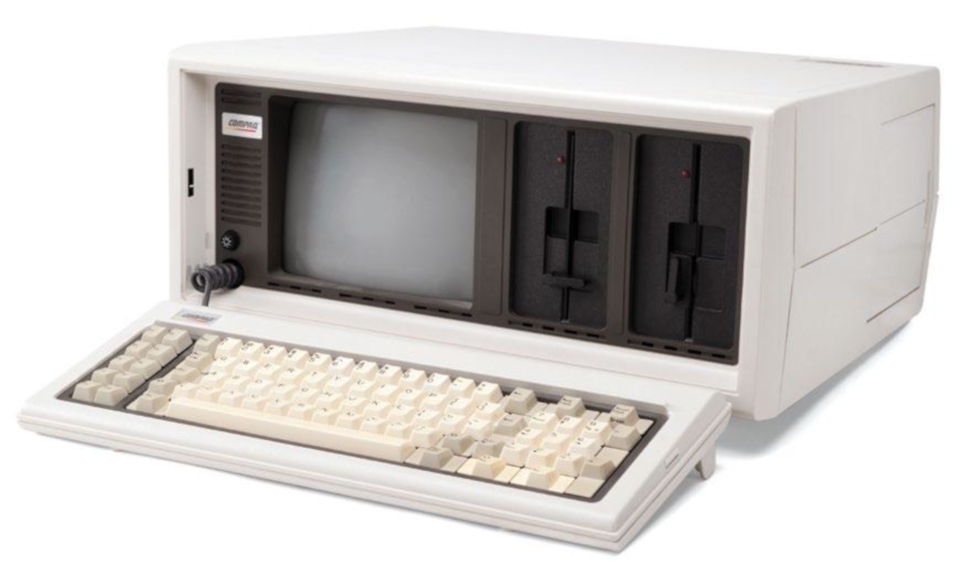

The rise of the clones

Initially IBM wasn’t concerned. While a PC could be mostly replicated with retail parts, the BIOS belonged to IBM, which guaranteed proper IBM compatibility.

However, companies such as Award and American Megatrends reverse-engineered IBM’s BIOS, and companies such as Dell, Compaq and HP then used cloned BIOSes to build clone machines.

The first clone came from Columbia Data Products with 1982’s MPC 1600, but 1983 saw the landmark Compaq Portable, the first computer to be almost fully IBM compatible.

Compaq used its own BIOS and provided a very different form factor to a desktop PC, with all the components in one box, including a small CRT monitor.

When IBM released its ill-fated budget PCjr in 1984, RadioShack made a clone, the Tandy 1000. It was far more successful than the PCjr, with better PC compatibility.

After the PCjr’s cancellation, existing software and peripherals came to be associated with the Tandy. Far cheaper clones were eroding IBM’s control of the market, with its share dropping from 76 percent in 1983 to 26 percent in 1986.

Enter the 386

At least IBM had the technological lead, but even that would be eroded when Compaq released 1986’s Deskpro 386. Intel had recently released its 32-bit 80386 CPU but, unfortunately for IBM, Compaq was first to market with a 386 machine, boasting 1MB of RAM and MS-DOS 3.1.

The Deskpro 386 was two to five times faster than a 286, with a base price of $6,500. Compaq’s machines were the very top of the line, and would steal IBM’s title of business leader.

IBM fought back with 1987’s Personal System/2 (PS/2), finally releasing a 386 to market; the most powerful model sported a 20MHz CPU, 2MB of RAM and a 115MB hard disk.

This was a landmark computer, standardising on things such as a 1.44MB 3.5-inch floppy, and the PS/2 ports still used by mice and keyboards.

However, the biggest leap was in the introduction of VGA graphics. On the desktop, this meant 640x480 in 16 colours, and a low-res mode of 320x200 in 256 colours, popular for gaming.
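The appeal of those two modes becomes clearer once you work out how much video memory a single frame needs. The sketch below is illustrative arithmetic only: the function name is ours, and the 64KB figure for one real-mode memory segment is an assumption brought in for comparison rather than something stated in this article.

```python
SEGMENT_BYTES = 64 * 1024  # assumption: size of one real-mode x86 memory segment


def framebuffer_bytes(width: int, height: int, colours: int) -> int:
    """Bytes for one frame, using the fewest whole bits per pixel for `colours`."""
    bits_per_pixel = (colours - 1).bit_length()  # 16 colours -> 4, 256 -> 8
    return width * height * bits_per_pixel // 8


MODES = {
    "EGA 640x350, 16 colours": (640, 350, 16),
    "VGA 640x480, 16 colours": (640, 480, 16),
    "VGA 320x200, 256 colours": (320, 200, 256),
}

for name, (width, height, colours) in MODES.items():
    size = framebuffer_bytes(width, height, colours)
    verdict = "fits in" if size <= SEGMENT_BYTES else "exceeds"
    print(f"{name}: {size:,} bytes ({verdict} one 64KB segment)")
```

By this reckoning the 320x200 mode needs 64,000 bytes, just under the 65,536 bytes of a single segment, which is one plausible reason it proved so convenient for games.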

Despite the incredible advances, IBM continued to lose ground to the clones. Although the PS/2 line sold well for a time, IBM’s machines were still too expensive for the general public.

As the ’80s progressed, the name ‘PC’ started losing its association with IBM, and people started referring instead to ‘IBM-compatibles’.

Although the PC was sweeping America, in many regions worldwide micros were still wildly popular; Europe was particularly enamoured with the Atari ST and Commodore Amiga.

Where PCs were lacking in the GUI stakes, these Motorola 68000-based machines already had sophisticated GUIs and astonishing multimedia capabilities that would trounce PCs for some years – often at a fraction of the cost.

Nevertheless, the PC continued to grow and develop, with further advancements such as 800x600 SVGA (Super VGA) graphics in 1988.

And the ’80s had one last trick up their sleeve: in April 1989, Intel released the 486, the powerhouse CPU that would kickstart the next decade.

The first 486 computer to ship was IBM’s 486/25 Power Platform in October, the most powerful machine on the market. We entered the decade with 8-bit micros and left with full 32-bit processors and SVGA graphics.

It’s unlikely such rapid progress will be repeated.

32-bit OS Wars

There’s no doubt that Windows is synonymous with the PC.

Ask most people what runs on a PC and that’s what they’re going to say. It’s not surprising because, as we’ll see, the “WinTel” conglomeration releases updates of processors and software practically in lock-step.

But then why wouldn’t it? New software features require faster hardware, and faster hardware requires more demanding software to drive upgrades. It’s a virtuous consumer cycle.

Yet while Microsoft was embracing and extending its software range, there was another project getting off the ground. It started off from the humble beginnings of running a student’s i386 PC, eventually going on to power the fastest computers on the planet.

But how could a ragtag collection of developers that included a Finnish student and some Californian academic hippies create an ecosystem that would challenge Microsoft?

Let’s find out!

We’ll be discussing the major changes in the consumer and business world here, but we have the timeline at the end of the article with Linux and open source developments, so you can see how it all matches up.

The first versions of Windows were unsuccessful, but with 1990’s Windows 3.0, the PC desktop was seen as a viable alternative to the Macintosh and Amiga. Windows 3.0 had a new interface, “multitasking” abilities, and mouse-driven productivity suites that freed users from the command line.

Meanwhile, IBM’s OS/2 had been trying to establish itself as the respectable GUI for corporate America. By 1990, the alliance between IBM and Microsoft had essentially finished, with the two becoming rivals.

Although newer versions of OS/2 would be more advanced, for now Microsoft had the technological advantage. IBM was still hampered by 286 machines, keeping OS/2 primarily 16-bit and thus unable to make use of the 386’s advanced features.

April 1992 finally saw OS/2 become 32-bit. In most ways, it was superior, with extensions to DOS, and Windows 3.x support in a stable environment.

But while Windows targeted clone machines, OS/2 targeted IBM hardware, so it couldn’t run on many clones where Windows ran perfectly. Furthermore, while IBM sold OS/2 as a separate product, Microsoft bundled Windows with new PCs.

Microsoft’s dominance started with Windows for Workgroups 3.11 in August 1993. It had new 32-bit capabilities and proper networking.

It devoured the business space, and 3.11 would be the environment many people grew up with. Around this time, Debian and Slackware were released.

The multimedia age

In the mid-’90s, every PC had a soundcard, a CD-ROM drive and a tinny set of multimedia speakers. CD-ROM’s 650MB of storage made possible more expansive gaming, with video cut-scenes and CD-audio soundtracks. Schools bought edutainment packages with archived video and interactivity.

By now, the 486 was standard. Although 386s were still functional business machines, you needed a 486 to enjoy “multimedia”. Thankfully, hardware prices fell dramatically; while ’80s PCs usually had Intel CPUs, rival manufacturers were on the ascent and lowering costs.

Although AMD CPUs were often from a previous generation to Intel’s, its chips were more efficient and enabled higher clock speeds, giving similar performance at much lower prices.

Cyrix was making a name for itself with 486-upgrade processors, providing a cheap upgrade route for 386 owners with a new CPU in their old motherboard.

1993’s Intel Pentium brought the next generation of CPUs. Intel dropped the “86” to differentiate itself from other manufacturers, with “Pent” coming from the Greek “penta,” meaning five (implying a 586 without saying it). The Pentium gave almost twice the performance per clock cycle of the 486, but early Pentiums ran at only 60-66MHz. Meanwhile, AMD was pumping out very highly clocked 486s, such as the DX4-120 running at 120MHz, nearly matching early Pentiums. AMD’s strong performance and low prices attracted manufacturers such as Acer and Compaq, whereas Cyrix’s efficient designs caught IBM’s eye, starting a partnership in 1994.
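A back-of-envelope model shows why a fast 486 could keep pace: treat relative speed as work done per clock multiplied by clock speed. The sketch below is a deliberate simplification, not a benchmark. The function name is ours, and the Pentium's per-clock factor of 2.0 is an assumption that rounds up the "almost twice" figure quoted above.

```python
PENTIUM_WORK_PER_CLOCK = 2.0  # assumption: "almost twice" a 486, rounded up


def relative_speed(clock_mhz: float, work_per_clock: float = 1.0) -> float:
    """Crude speed score: clock speed scaled by work done per clock (486 = 1.0)."""
    return clock_mhz * work_per_clock


chips = {
    "AMD 486 DX4-120": relative_speed(120),
    "Pentium 60": relative_speed(60, PENTIUM_WORK_PER_CLOCK),
    "Pentium 66": relative_speed(66, PENTIUM_WORK_PER_CLOCK),
}

for name, score in chips.items():
    print(f"{name}: score {score:.0f}")
```

Even with the most generous per-clock factor, the 60MHz Pentium only draws level with the 120MHz 486 in this model, so a cheaper, highly clocked 486 was a reasonable buy while Pentium clock speeds stayed low.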

1995 saw the introduction of the ATX standard we use today, defining new mounting placements and features like automatic shutdowns. Unlike XT and AT, this change was brought by Intel instead of IBM.

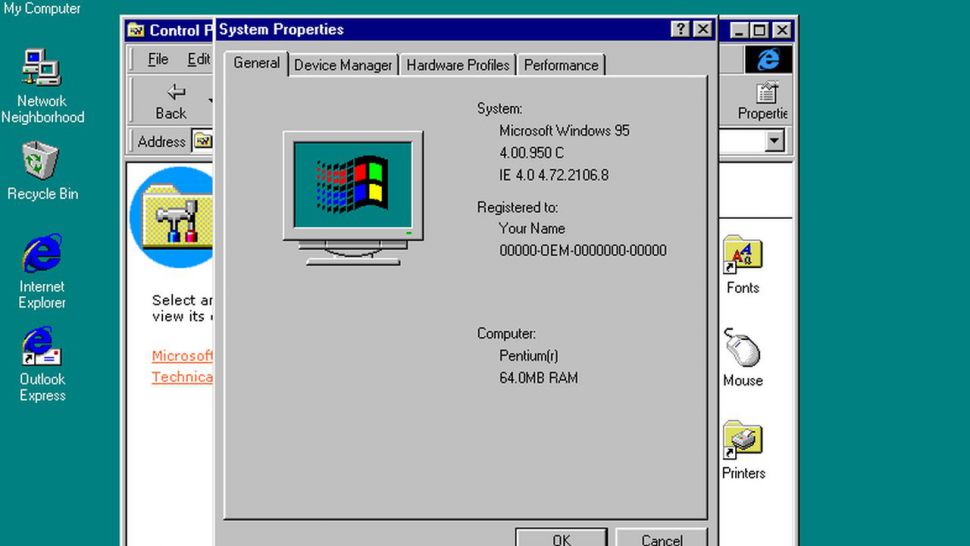

Windows 95 makes its debut

August 1995 would see the biggest change to the computing landscape (Red Hat was formed? – Ed) yet: Windows 95. On the technical side, Windows 95 was designed around 32-bit preemptive multitasking, compatibility with existing DOS and Windows 3.x programs, and new tech such as DirectX and Plug and Play (Pray – Ed) support.

Windows 95 truly established Microsoft. Computing had become mainstream and Microsoft was a household name. It was over for competitors: Commodore had gone bankrupt, Atari hit the wall and Apple was barely surviving.

IBM still had OS/2, with its newer Warp release from a year prior, but this only supported Win 3.x applications and sank into irrelevancy.

When Windows 98 arrived it fixed many of the teething problems of Windows 95, with a more stable system, better hardware support and UI enhancements.

This was also when the anti-trust lawsuits began, as Microsoft bundled Internet Explorer with Windows, itself already bundled with new computers.

Now Microsoft would dominate not just PCs, but internet use too.

3D accelerator cards – such as 3dfx’s Voodoo 2, Nvidia’s Riva TNT, and ATI’s Rage series – would be a defining feature of the late ’90s. 3D acceleration brought a new era of PC gaming.

Where previous games relied on the CPU for all rendering, these new graphics cards added a GPU (graphics processing unit), which took the graphical processing burden away from the CPU. This resulted in substantially faster gaming and stunning graphical effects.

Although 3dfx tried to corner the market with its proprietary Glide API, it eventually lost out to competitors who used market standards such as DirectX and Silicon Graphics’s OpenGL. The ultimate card of the ’90s would be 1999’s Nvidia GeForce 256.

AMD went from strength to strength. In 1996, AMD released the K5, the first Pentium rival, but 1997 brought true success with the K6. This was a proper rival to the new Pentium II, but could work in older Socket 7 motherboards, too. The K6 series was wildly successful, with its famous 3DNow! instructions and cheaper prices.

The successive K6-2 and K6-3 chips continued to rival advancing Pentium II and III models, and would eventually dominate most of the sub-$1,000 market. The decade would end with 1999’s K7 Athlon, which in early 2000 became the first retail CPU to reach the 1GHz mark.

The 90s were a time of survival of the fittest, ending with one dominant OS and two CPU makers. Even the GPU market was contracting to just Nvidia and ATI.

Alongside the launch of Linux Format, the formation of the precursor to the Linux Foundation and the arrival of Corel Linux, the new century would start with Windows 2000 (arguably the best release of Windows) and Windows Me (arguably the worst).

Windows 2000 was based on Microsoft’s NT platform, finally moving Windows away from DOS, while remaining mostly backward compatible with Windows 9x and DOS programs.

October 2001 saw the release of Windows XP, using the same NT base as 2000, with a revamped interface, and improved multimedia capabilities. While previous versions of Windows were pretty drab, XP was colourful.

XP made piracy much harder, being the first Windows to have an activation scheme. The mix of relative stability and a friendly GUI made Windows XP one of the most popular OSes of all time.

Linux developers started taking the desktop more seriously: Ubuntu was released in 2004, and people started to say “year of the Linux desktop”!

Dawn of the 32-bit age

It’s difficult to overstate the 386’s importance. In short, the 32-bit 386 is where modern computing began.

List the features of a modern OS and, for the PC, these abilities started with the 386, which served as the basis and minimum spec for the next generation of OSes in the ’90s.

With the 386, PC operating systems immediately became more advanced, with a flood of Unix variants being ported to the platform.

Advanced computing was previously dominated by expensive Unix workstations, but once the PC went 32-bit they grew redundant and big Unix companies such as DEC and Sun Microsystems started falling away. Until late 2012, a 386 could still run Linux (now it requires a decadent 486).
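One concrete measure of that jump is how much memory each generation of processor can address directly. The sketch below is illustrative arithmetic: the helper names are ours, and the address widths used (20 bits for the 8088, 24 for the 286, 32 for the 386) are figures we are supplying rather than ones given in this article, although the 286 result matches the 16MB ceiling quoted for the PC/AT.

```python
ADDRESS_BITS = {  # assumption: physical address width of each CPU
    "8088": 20,
    "286": 24,
    "386": 32,
}


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses available with this many address bits."""
    return 2 ** address_bits


def human_readable(num_bytes: int) -> str:
    """Format a power-of-two byte count as whole KB, MB or GB (1KB = 1,024 bytes)."""
    for unit, size in (("GB", 1024 ** 3), ("MB", 1024 ** 2), ("KB", 1024)):
        if num_bytes >= size:
            return f"{num_bytes // size}{unit}"
    return f"{num_bytes} bytes"


for cpu, bits in ADDRESS_BITS.items():
    print(f"{cpu}: {bits}-bit addresses -> {human_readable(addressable_bytes(bits))}")
```

Each extra address bit doubles the ceiling, so going from 24 to 32 bits multiplies the addressable memory by 256.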

The 64-bit age

In April 2003, AMD released its 64-bit Opteron processor. This was the first major change to the x86 platform not made by Intel, and would be labelled either x86-64 or, embarrassingly for Intel, AMD64.

Intel was forced into the position of modifying its processors for software compatibility with AMD’s new specification. Intel had banked on its Itanium IA-64 EPIC architecture taking off, but it was expensive and offered no performance advantage over RISC or AMD64.

In May 2005, IBM abandoned the PC it had created, selling its PC division to Lenovo in a deal worth nearly $2 billion.

Scepticism was high over the viability of such a merger but Lenovo went on to become the biggest PC vendor in the world, while IBM would focus on big-data markets and whatever “the cloud” is.

In June 2005, Apple announced that Macs would switch from PowerPC to x86 processors.

Steve Jobs was disappointed in the progress of PowerPC CPUs, which were slower than Apple had promised consumers, too hot for laptops, and consumed too much power (that sounds familiar – Ed).

Between July and October 2006, AMD bought out graphics company ATI Technologies in a deal worth $5.6 billion.

Merging ATI’s graphics tech with its existing CPU know-how, AMD was now taking on the might of both Intel and Nvidia with the manufacturing strength of combined technologies.

What about BeOS?

Be Inc. (founded by ex-Apple executive Frenchman Jean-Louis Gassée) launched the BeBox in October 1995, running its own operating system, BeOS. Optimised around multimedia performance for the masses, BeOS was intended to compete with both Mac OS and Windows.

The OS was lightning fast and free of the legacies of old 16-bit hardware, with features such as symmetric multi-processing for multi-CPU machines, pre-emptive multitasking, and the 64-bit journaled file system BFS.

Although the BeBox itself was unsuccessful, BeOS was ported to the Macintosh in 1996, and almost became the new system to replace Mac OS. Gassée’s $300 million asking price was too steep, however, and Apple went with Steve Jobs’s NeXTSTEP OS instead.

BeOS was then ported to the PC in 1998, later joined by a free, stripped-down BeOS 5.0 Personal Edition, but it failed to gain more than a niche audience (Microsoft may also have worked against its adoption). Be Inc. was bought out by Palm Inc. in 2001.

After numerous attempts to recreate it, BeOS is now survived by Haiku, a popular open-source re-implementation with BeOS binary compatibility in its 32-bit versions.

The open source age

In January 2007, Windows Vista was released. It was designed to be more secure, with an updated GUI using effects like window translucency, but it was savaged by the press. Windows Vista had bad backwards compatibility, long load times, driver issues and a stream of invasive warning messages.

Windows 7 was finalised in July 2009 and reached shops that October. Based on the same platform as Vista, it refined the codebase, bringing performance improvements, improved stability and a sensible interface.

Windows 7 would become the fastest selling OS in history, and around a third of PCs still use it. This, despite Microsoft ending support for the venerable OS at the start of 2020.

In July 2012, Google’s Chrome browser overtook Internet Explorer in usage share, and by April 2013 both Chrome and Firefox had a greater share of users than Internet Explorer, ending Microsoft’s dominance of the browser market.

In October 2012, Windows 8 was released. Despite Microsoft’s attempts to innovate, Windows 8 was critically savaged. Because mobile devices were overtaking traditional desktops, Windows 8 tried to have more of a “touch” interface, removing the “Start” button, and switching to a tile-based design.

The result was dreadful. Windows 8.1 addressed many of its criticisms, chiefly by bringing back the “Start” button and enabling users to boot a traditional desktop. But again, the damage was done. While Windows 7 is still in wide use, Windows 8 is almost forgotten.

Taking Microsoft through to the end of the decade, Windows 10 was released in July 2015 to a mixed reception. A more functional interface blended Windows 7’s traditional GUI and Windows 8’s tile system, and Windows finally gained virtual desktops – something that has been a part of Linux for decades.

On the downside, forced system updates infuriate users, the Start menu is advert-bloated, there’s a worrying amount of data collection and the Microsoft Store undermines the open nature of the PC platform.

Even so, Microsoft still dominates the consumer PC, but is no longer a monopoly. Apple has spent most of the decade wealthier than Microsoft. Linux has crept into everything from DVD players to the world’s supercomputers, and holds a 3% desktop share.

Microsoft has gone from calling Linux “a cancer” to proclaiming “Microsoft loves Linux”, shipping Windows with a Linux kernel, running its own Azure Sphere Linux distribution, basing its Edge browser on the Chromium project and releasing various projects as open source.

To focus on the PC, it isn’t the dominant format that it once was. IBM has long since left the market it created, and wisely so. Computing is more varied and takes many forms, from iPads to smartphones, from Chromebooks to weird Android devices that no one can categorise without consulting a dictionary.

Computing has returned to a level of diversity similar to that of the 80s, but the PC no longer has the same supremacy – and nor do the giants that established it, such as IBM, Intel and Microsoft.

Linux Timeline

25 AUGUST 1991 - Linus announces on comp.os.minix

Linus Torvalds, a 21-year-old student at the University of Helsinki, Finland, starts toying with the idea of creating his own clone of the Minix OS.

17 SEPTEMBER 1991 - v0.01 Posted on ftp.funet.fi

This release includes Bash v1.08 and GCC v1.40. At this time, the source-only OS is free of any Minix code and has a multi-threaded file system.

NOVEMBER 1991 - v0.10 Linux is self-building

Linus overwrites critical parts of his Minix partition. Since he couldn’t boot into Minix, he decided to write the programs to compile Linux under itself.

5 JANUARY 1992 - v0.12 GPL license

Linux originally had its own licence to restrict commercial activity. Linus switches to GPL with this release.

7 MARCH 1992 - v0.95 X windows

A hacker named Orest Zborowski ports X Windows to Linux.

14 MARCH 1994 - v1.0.0 C++ compiled

The first production release. Linus had been overly optimistic in naming v0.95 and it took about two years to get version 1.0 out the door.

7 MARCH 1995 - v1.2.0 Linux ‘95

Portability is one of the first issues to be addressed and this version gains support for computers using processors based on the Alpha, SPARC and MIPS architectures.

9 JUNE 1996 - v2.0.0 SMP support

Symmetric multiprocessing (SMP) is added, which made it a serious contender for many companies.

20 FEBRUARY 2002 - v2.5.5 64-bit CPUs

The first to support AMD 64-bit (x86-64) and PowerPC 64-bit.

17 DECEMBER 2003 - v2.6.0 The beaver detox

Major overhaul to Loadable Kernel Modules (LKM). Improved performance for enterprise-class hardware, the Virtual Memory subsystem, the CPU scheduler and the I/O scheduler.

29 NOVEMBER 2006 - v2.6.19 ext4

Experimental support for the ext4 filesystem.

5 FEBRUARY 2007 - v2.6.20 KVM arrives

Kernel-based Virtual Machine (KVM) is merged, adding Intel and AMD hardware virtualisation extensions.

25 DECEMBER 2008 - v2.6.28 Graphics rewrite

The Linux graphics stack was fully updated to ensure it utilised the full power of modern GPUs.

21 JULY 2011 - v3.0 20-years young

This version bump is not about major technological changes, but instead marks the kernel’s 20th anniversary.

18 MARCH 2012 - v3.3 EFI Boot support

An EFI boot stub enables an x86 bzImage to be loaded and executed directly by EFI firmware.

12 APRIL 2015 - v4.0 Hurr Durr I’ma Sheep released

Linus Torvalds polls users on whether the next release should be numbered 4.x. The poll also approves the name.

MARCH 2019 - v5.0 Shy Crocodile

Major additions include: WireGuard, USB 4, 2038 fix, Spectre fixes, RISC-V support, exFAT, AMDGPU and so much more!

2021… - v6+ The future…

Who knows what the next 25 years have in store for Tux...
